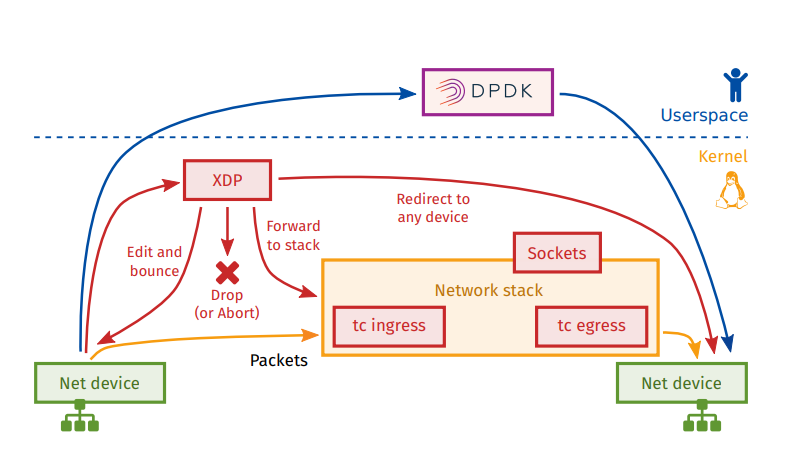

XDP

Introduced in Linux 4.8: an eBPF hook at the driver level (ingress).

Intercepts packets before they reach the stack, before an sk_buff is allocated.

Rationale: implement a faster data path that is part of the kernel and maintained by the kernel community. Intended mainly for simple use cases.

Complex processing: forward the packet to the stack. XDP is not a “kernel bypass”; it works in cooperation with the networking stack.

Essentially, user-space networking achieves high-speed performance by moving packet-processing out of the kernel’s realm into user-space.

XDP in fact does the opposite: it moves user-space networking programs (filters, mappers, routing, etc.) into the kernel’s realm.

XDP allows us to execute our network function as soon as a packet hits the NIC, before it starts moving up into the kernel’s networking layer, which results in a significant increase in packet-processing speed.

Accelerating-VM-Networking-through-XDP_Jason-Wang.pdf

https://help.netronome.com/support/solutions/articles/36000050009-agilio-ebpf-2-0-6-extended-berkeley-packet-filter

https://www.netronome.com/blog/hello-xdp_drop/

https://archive.fosdem.org/2018/schedule/event/xdp/attachments/slides/2220/export/events/attachments/xdp/slides/2220/fosdem18_SdN_NFV_qmonnet_XDPoffload.pdf

XDP MODES

In total, XDP supports three operation modes which iproute2 implements as well: xdpdrv, xdpoffload and xdpgeneric.

xdpdrv stands for native XDP, meaning the BPF program is run directly in the driver’s receive path at the earliest possible point in software.

This is the normal / conventional XDP mode and requires drivers to implement XDP support, which all major 10G/40G/+ networking drivers in the upstream Linux kernel already provide.

xdpgeneric stands for generic XDP and is intended as an experimental test bed for drivers which do not yet support native XDP.

Given that the generic XDP hook in the ingress path comes at a much later point in time, when the packet has already entered the stack’s main receive path as an skb, the performance is significantly lower than with processing in xdpdrv mode.

xdpgeneric therefore is for the most part only interesting for experimenting, less for production environments.

xdpoffload is implemented by SmartNICs such as those supported by Netronome’s nfp driver and allows the entire BPF/XDP program to be offloaded into hardware, so the program runs directly on the card for each received packet. This provides even higher performance than native XDP, although not all BPF map types or BPF helper functions are available compared to native XDP. In that case the BPF verifier rejects the program and reports to the user what is unsupported. Other than staying within the supported BPF features and helper functions, no special precautions have to be taken when writing BPF C programs.
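When a program is attached through the netlink or libbpf APIs instead of `ip`, the three modes are selected by flag bits defined in `linux/if_link.h`. The following is a minimal sketch, assuming the UAPI values `XDP_FLAGS_SKB_MODE` (1 << 1), `XDP_FLAGS_DRV_MODE` (1 << 2) and `XDP_FLAGS_HW_MODE` (1 << 3). The `MODE_*` constants and the `mode_to_flag` helper are hypothetical names used only for illustration.

```c
#include <string.h>

/* Local copies of the XDP attach-mode bits from <linux/if_link.h>
 * (assumed values; include the real header in production code). */
#define MODE_SKB (1U << 1)  /* XDP_FLAGS_SKB_MODE: generic XDP   */
#define MODE_DRV (1U << 2)  /* XDP_FLAGS_DRV_MODE: native XDP    */
#define MODE_HW  (1U << 3)  /* XDP_FLAGS_HW_MODE:  offloaded XDP */

/* Hypothetical helper: translate an iproute2 mode keyword into the
 * matching flag bit. Returns 0 for an unknown keyword. */
static unsigned int mode_to_flag(const char *keyword)
{
    if (strcmp(keyword, "xdpgeneric") == 0)
        return MODE_SKB;
    if (strcmp(keyword, "xdpdrv") == 0)
        return MODE_DRV;
    if (strcmp(keyword, "xdpoffload") == 0)
        return MODE_HW;
    return 0;
}
```

Exactly one of the three mode bits is normally set per attach request, which is why `ip` exposes them as mutually exclusive keywords.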

#include <linux/bpf.h>

int main()
{
    return XDP_DROP;
}

clang -target bpf -O2 -c xdp.c -o xdp.o

ip -force link set dev ens1f0 xdpdrv obj xdp.o sec .text

ip link show ens1f0

32: ens1f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 xdp qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether f4:e9:d4:ed:25:38 brd ff:ff:ff:ff:ff:ff

prog/xdp id 36

0 XDP_ABORTED - Error, Block the packet

1 XDP_DROP - Block the packet

2 XDP_PASS - Allow the packet to continue up the kernel

3 XDP_TX - Bounce the packet back in the direction it came from
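The numeric codes above correspond to the kernel's `enum xdp_action` in `linux/bpf.h`. As a minimal sketch, the helper below mirrors those values in a local enum and maps a code to its name. The `ACT_*` names and `xdp_action_name` are hypothetical, chosen to avoid clashing with the real header. Newer kernels also define a fifth action, `XDP_REDIRECT` (4), which the list above does not cover.

```c
#include <stddef.h>

/* Local mirror of the action codes; the real definitions live in
 * enum xdp_action in <linux/bpf.h>. Names are prefixed to avoid clashes. */
enum act {
    ACT_XDP_ABORTED  = 0,
    ACT_XDP_DROP     = 1,
    ACT_XDP_PASS     = 2,
    ACT_XDP_TX       = 3,
    ACT_XDP_REDIRECT = 4,
};

/* Hypothetical helper: return a printable name for an action code,
 * or NULL if the code is outside the known range. */
static const char *xdp_action_name(unsigned int code)
{
    static const char *const names[] = {
        "XDP_ABORTED", "XDP_DROP", "XDP_PASS", "XDP_TX", "XDP_REDIRECT",
    };

    if (code >= sizeof(names) / sizeof(names[0]))
        return NULL;
    return names[code];
}
```

A helper like this is mostly useful in userspace tooling, for example when turning per-action counters read from a BPF map into readable output.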

$ hping3 [IP Address of Host]

Traffic can be monitored using tcpdump; however, it will show that no packets are received.

This is because XDP drops packets at the start of the kernel path, before they can reach tcpdump.

Unload XDP

ip link set dev [DEV] xdpdrv off

Load with hardware offload

ip -force link set dev ens1f0 xdpoffload obj xdp.o sec .text

Testing XDP

Steps

Description

Step 1

Check whether "clang" is installed; if not, install it with yum install clang. XDP is supported only on RHEL 8 and later kernels.

Step 2

Create the xdp_drop.c file in the "/usr/src/kernels/$(uname -r)/net/xdp" directory

touch /usr/src/kernels/$(uname -r)/net/xdp/xdp_drop.c

Step 3

Write the xdp_drop code into the xdp_drop.c file

#include <linux/bpf.h>

#ifndef __section
# define __section(NAME) \
    __attribute__((section(NAME), used))
#endif

__section("prog")
int xdp_drop(struct xdp_md *ctx)
{
    return XDP_DROP;
}

char __license[] __section("license") = "GPL";

Step 4

Compile the code with the command below to produce the object file

clang -O2 -Wall -target bpf -c xdp_drop.c -o xdp_drop.o

Step 5

Load the xdp_drop.o file on both interfaces (PFs) with the commands below

ip link set dev ens3f0 xdp obj xdp_drop.o

ip link set dev ens3f1 xdp obj xdp_drop.o

Step 6

With the "ip link show" command, check that XDP is loaded and has been assigned a program id.

[root@Gen9-XDP-Host-RHEL8 xdp]# ip link show

4: ens3f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 xdp qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 00:0e:1e:d6:62:fc brd ff:ff:ff:ff:ff:ff

prog/xdp id 1 tag f95672269956c10d jited

5: ens3f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 xdp qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 00:0e:1e:d6:62:fd brd ff:ff:ff:ff:ff:ff

prog/xdp id 2 tag f95672269956c10d jited

Step 7

Send traffic with the scapy tool from the peer system to both interfaces simultaneously

sendp (Ether(src="00:0e:1e:d6:62:fc",dst="14:02:ec:d3:af:0a")/IP(src="44.44.44.1",dst="55.55.55.1")/TCP(sport=0xbbbb,dport=0xaaaa)/("x"*200), iface="ens3f0",count=1000000)

sendp (Ether(src="00:0e:1e:d6:62:fd",dst="14:02:ec:d3:af:0b")/IP(src="44.44.44.1",dst="55.55.55.1")/TCP(sport=0xbbbb,dport=0xaaaa)/("x"*200), iface="ens3f1",count=1000000)

1. Packets were observed being dropped and the “xdp_no_pass” counters were increasing. No packets were seen in tcpdump, which suggests that the eXpress Data Path was being used.

[root@Gen9-XDP-Host-RHEL8 xdp]# ethtool -S ens3f0 | grep xdp

0: xdp_no_pass: 5000

1: xdp_no_pass: 3731

2: xdp_no_pass: 5000

3: xdp_no_pass: 4000

4: xdp_no_pass: 4609

5: xdp_no_pass: 5000

6: xdp_no_pass: 4000

7: xdp_no_pass: 5000

2. You should not see any unexpected failures in dmesg or /var/log/messages.

3. You should not see any driver/FW failure messages or a system hang.
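To compare the drop counters against the number of packets sent, the per-queue values from `ethtool -S` can be summed. The following is a minimal sketch, assuming each line has the `N: xdp_no_pass: COUNT` shape shown in the output above. `sum_xdp_no_pass` is a hypothetical helper name.

```c
#include <stdio.h>
#include <string.h>

/* Sum the xdp_no_pass counters found in a block of ethtool -S text.
 * Lines that do not match "<queue>: xdp_no_pass: <count>" are ignored. */
static unsigned long long sum_xdp_no_pass(const char *text)
{
    unsigned long long total = 0;

    while (*text) {
        size_t len = strcspn(text, "\n");   /* length of the current line */
        char line[128];

        if (len < sizeof(line)) {
            unsigned int queue;
            unsigned long long count;

            /* Copy the line so sscanf cannot read past its end. */
            memcpy(line, text, len);
            line[len] = '\0';
            if (sscanf(line, "%u: xdp_no_pass: %llu", &queue, &count) == 2)
                total += count;
        }

        text += len;
        if (*text == '\n')
            text++;                         /* step over the newline */
    }
    return total;
}
```

Applied to the eight queue lines shown for ens3f0, the helper returns 36340.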

LOADING IN NATIVE MODE

# ip -force link set dev em1 xdpdrv obj prog.o

# ip link show

[...]

6: em1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 xdp qdisc mq state UP mode DORMANT group default qlen 1000

link/ether be:08:4d:b6:85:65 brd ff:ff:ff:ff:ff:ff

prog/xdp id 1 tag 57cd311f2e27366b

[...]

# ip link set dev em1 xdpdrv off

The verb option can be appended when loading a program in order to dump the verifier log:

# ip -force link set dev em1 xdpdrv obj prog.o verb

LOADING IN GENERIC MODE

# ip -force link set dev em1 xdpgeneric obj prog.o

# ip link show

[...]

6: em1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 xdpgeneric qdisc mq state UP mode DORMANT group default qlen 1000

link/ether be:08:4d:b6:85:65 brd ff:ff:ff:ff:ff:ff

prog/xdp id 4 tag 57cd311f2e27366b <-- BPF program ID 4

[...]

# bpftool prog dump xlated id 4 <-- Dump of instructions running on em1

0: (b7) r0 = 1

1: (95) exit

# ip link set dev em1 xdpgeneric off
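The two instructions in the dump are the whole program: load the immediate 1 (`XDP_DROP`) into `r0`, the return-value register, then exit. The bracketed hex numbers are eBPF opcode bytes. The following is a minimal sketch of how they decompose, using the standard eBPF opcode layout for ALU and jump instructions (low three bits for the instruction class, bit 3 for the source operand, high four bits for the operation). The `OP_*`, `CLASS_*` and `CODE_*` macros and the `describe` helper are hypothetical local names.

```c
#include <stdint.h>

#define OP_CLASS(op) ((op) & 0x07)  /* instruction class            */
#define OP_SRC(op)   ((op) & 0x08)  /* 0 = immediate, 8 = register  */
#define OP_CODE(op)  ((op) & 0xf0)  /* operation within the class   */

#define CLASS_JMP   0x05            /* BPF_JMP   */
#define CLASS_ALU64 0x07            /* BPF_ALU64 */
#define CODE_MOV    0xb0            /* BPF_MOV   */
#define CODE_EXIT   0x90            /* BPF_EXIT  */

/* Hypothetical helper: give a short description of the two opcode
 * kinds that appear in the dump; everything else is "other". */
static const char *describe(uint8_t op)
{
    if (OP_CLASS(op) == CLASS_ALU64 && OP_CODE(op) == CODE_MOV)
        return OP_SRC(op) ? "mov64 reg" : "mov64 imm";
    if (OP_CLASS(op) == CLASS_JMP && OP_CODE(op) == CODE_EXIT)
        return "exit";
    return "other";
}
```

With this decoding, `0xb7` is a 64-bit move of an immediate and `0x95` is an exit, matching `r0 = 1` followed by `exit`.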

XDP Related Config

==================

CONFIG_CGROUP_BPF=y

CONFIG_BPF=y

CONFIG_BPF_SYSCALL=y

CONFIG_NET_SCH_INGRESS=m

CONFIG_NET_CLS_BPF=m

CONFIG_NET_CLS_ACT=y

CONFIG_BPF_JIT=y

CONFIG_LWTUNNEL_BPF=y

CONFIG_HAVE_EBPF_JIT=y

CONFIG_BPF_EVENTS=y

CONFIG_TEST_BPF=m

CONFIG_XDP_SOCKETS=y

$ cd tools/testing/selftests/bpf/

$ make

$ sudo ./test_verifier

Sample code to drop IP traffic from 50.50.50.1

#include "../../include/uapi/linux/bpf.h"

#include "../../include/uapi/linux/if_ether.h"

#include "../../include/uapi/linux/if_packet.h"

#include "../../include/uapi/linux/ip.h"

#include "../../include/uapi/linux/in.h"

#include "../../include/uapi/linux/tcp.h"

#include "../../include/uapi/linux/udp.h"

//#include "bpf_helpers.h"

#ifndef __section
# define __section(NAME) \
    __attribute__((section(NAME), used))
#endif

/* 50.50.50.1 as a host-order integer is 842150401 (0x32323201);
 * see https://www.vultr.com/resources/ipv4-converter/?ip_address=50.50.50.1 */
__section("prog")
int xdp_drop(struct xdp_md *ctx)
{
    void *data_end = (void *)(long)ctx->data_end;
    void *data = (void *)(long)ctx->data;

    /* Bounds checks are required by the verifier before reading the headers. */
    struct ethhdr *eth = data;
    if ((void *)(eth + 1) > data_end)
        return XDP_PASS;

    /* Note: the EtherType is not checked; the frame is assumed to be IPv4. */
    struct iphdr *iph = data + sizeof(struct ethhdr);
    if ((void *)(iph + 1) > data_end)
        return XDP_PASS;

    unsigned int ip_src = iph->saddr;

    /* iph->saddr is in network byte order. On a little-endian host,
     * htonl(842150401) == 20066866, i.e. 50.50.50.1 as stored in the packet. */
    if (ip_src == 20066866)
        return XDP_DROP;

    return XDP_PASS;
}
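The constant 20066866 is 50.50.50.1 in network byte order as read on a little-endian host. The following userspace sketch is not an XDP program. It replays the same bounds checks and source-address comparison on a hand-built frame, so the logic can be checked without a NIC. The names `check_frame`, `build_frame`, `ACT_DROP`, `ACT_PASS` and the `FRAME_*` offsets are hypothetical stand-ins for the kernel definitions, and `inet_pton` replaces the hard-coded constant.

```c
#include <arpa/inet.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { ACT_DROP = 1, ACT_PASS = 2 };  /* same values as XDP_DROP / XDP_PASS */

#define FRAME_ETH_LEN   14  /* Ethernet header length                */
#define FRAME_IP_LEN    20  /* IPv4 header length without options    */
#define FRAME_SADDR_OFF 12  /* offset of saddr inside the IP header  */

/* Drop the frame if its IPv4 source address equals `blocked`
 * (dotted-quad string); pass it otherwise or if it is too short. */
static int check_frame(const uint8_t *data, const uint8_t *data_end,
                       const char *blocked)
{
    struct in_addr want;
    uint32_t saddr;

    if (inet_pton(AF_INET, blocked, &want) != 1)
        return ACT_PASS;
    if (data + FRAME_ETH_LEN > data_end)
        return ACT_PASS;
    if (data + FRAME_ETH_LEN + FRAME_IP_LEN > data_end)
        return ACT_PASS;

    /* Both values are in network byte order, so no conversion is needed. */
    memcpy(&saddr, data + FRAME_ETH_LEN + FRAME_SADDR_OFF, sizeof(saddr));
    return saddr == want.s_addr ? ACT_DROP : ACT_PASS;
}

/* Fill `buf` (at least 34 bytes) with a zeroed Ethernet + IPv4 header
 * whose source address is `src_ip`; returns the frame length. */
static size_t build_frame(uint8_t *buf, const char *src_ip)
{
    struct in_addr src;

    memset(buf, 0, FRAME_ETH_LEN + FRAME_IP_LEN);
    buf[12] = 0x08;                 /* EtherType 0x0800: IPv4 */
    buf[13] = 0x00;
    buf[FRAME_ETH_LEN] = 0x45;      /* version 4, header length 5 words */
    if (inet_pton(AF_INET, src_ip, &src) == 1)
        memcpy(buf + FRAME_ETH_LEN + FRAME_SADDR_OFF, &src.s_addr,
               sizeof(src.s_addr));
    return FRAME_ETH_LEN + FRAME_IP_LEN;
}
```

On a little-endian machine the `s_addr` value produced by `inet_pton` for 50.50.50.1 equals 20066866 (0x01323232), matching the literal in the XDP code. A big-endian host would see 842150401 instead, which is why comparing against a converted address is more portable than a hard-coded integer.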

Good Links

https://docs.cilium.io/en/latest/bpf/

https://medium.com/@fntlnz/load-xdp-programs-using-the-ip-iproute2-command-502043898263

https://qmonnet.github.io/whirl-offload/2016/09/01/dive-into-bpf